Analog Chip May Be Key to Unlocking AI Power

New tools power faster, greener artificial intelligence for devices and health care

OXFORD, Miss. – Most computers use digital systems that separate memory, where data is stored, from processing, where it is used. This setup wastes energy because the computer must constantly move data back and forth, but a University of Mississippi professor is working to develop a more efficient alternative.

Sakib Hasan, an Ole Miss assistant professor of electrical and computer engineering, and Tamzidul Hoque, an assistant professor at the University of Kansas, are working on a system called analog in-memory computing. It lets computers process analog data right where it is stored, kind of like thinking and remembering in the same place. This saves energy and boosts computing speed.

"Scientists have been inspired by the human brain, so why not make a computer with a different architecture where the processor and memory are co-located?" Hasan said. "That's the motivation behind in-memory computing, and why it's so critical today."

The technology could have broad implications for the emerging development of artificial intelligence systems.

In 2020, the information and communication technologies sector accounted for an estimated 1.8% to 2.8% of global greenhouse gas emissions, according to data compiled by the University of Cambridge. That is more than aviation, which comes in at 1.9%.

"The carbon footprint of computation has been going up exponentially, especially with big data and the AI revolution," Hasan said. "If you make it analog, you can save orders of more energy."

The researchers said they do not expect to replace digital computers; they want to augment them.

"Analog systems use continuous signals, like a smooth wave, while digital systems break everything down into 0s and 1s," Hasan said. "Since the world around us is analog, we need special converters to translate those signals into digital form so computers can understand them.

"Digital processing typically requires a lot more transistors than analog, but it's more reliable and better at handling noise, which is why most chips today are digital, even though analog can be more efficient. Our goal is to create a tool that helps design analog AI chips that are both efficient and reliable, combining the best of both worlds."

"The digital processor will handle most tasks, but for heavy AI workloads, it will call on the analog chip, an accelerator or co-processor, to do the job more efficiently," Hasan said.

Most artificial intelligence applications run on powerful servers because phones or small devices cannot handle the complexity, Hoque said. However, developers want to incorporate AI in things such as drones to detect fires or in smartwatches to monitor health.

For that, faster, more efficient hardware is needed that can work locally and use less power, he said.

Analysts expect global demand for data center capacity to grow by 19% to 22% annually through 2030, management consulting firm McKinsey & Co. reports. At that rate, demand could triple from today's level, potentially requiring more capacity to be built in just five years than was built in the past two decades.

Much of that demand will be driven by the adoption of AI, particularly large models with massive computing and storage needs, the group reports.

Without major innovations such as in-memory computing and hardware-efficient AI, this growth will outpace our ability to supply clean, scalable and affordable infrastructure, Hoque said.

"We've developed a design and simulation framework that allows us to accurately test complex in-memory circuits," he said. "It tells us how much power they'll use, how fast they'll be and how much space they'll take before we manufacture the chip."

"In our everyday life, this will help us create better AI systems, and we'll start seeing applications of AI in new areas we can't even imagine right now," he said. "For example, in the future, we might have AI-capable chips implanted in the human body for health purposes.

"That's extremely challenging today, but as we make AI more efficient, that becomes achievable."

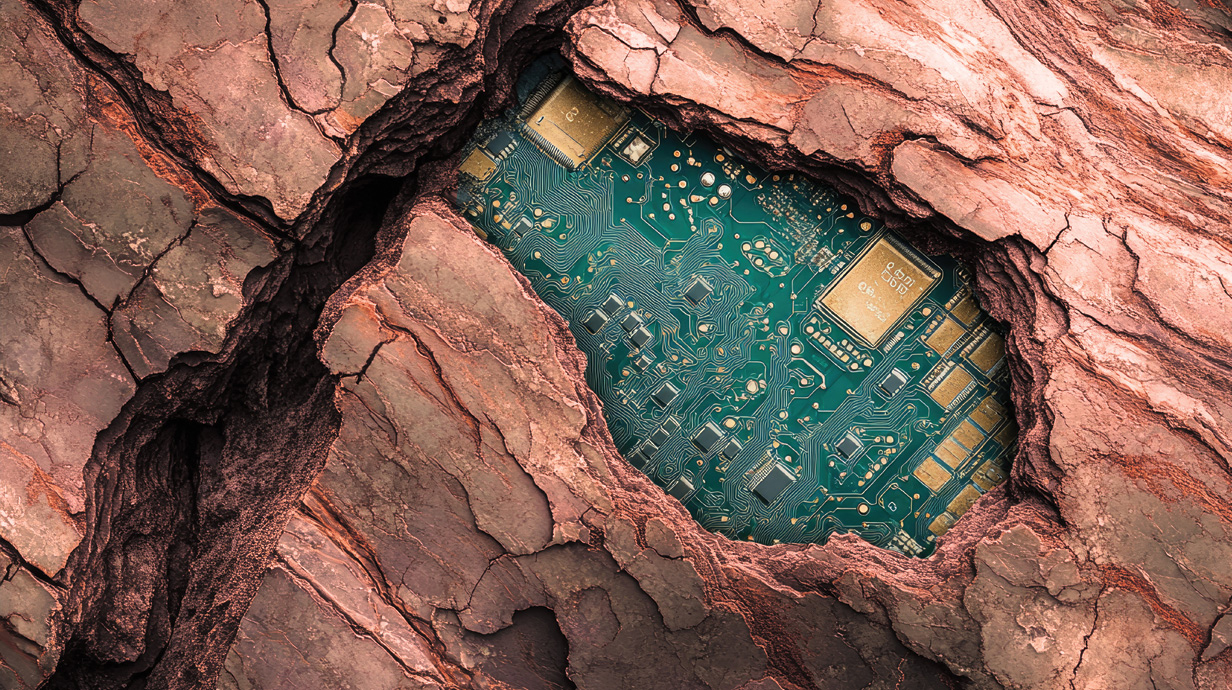

Top: Blending analog data handling with digital processing may help computer engineers build computers that operate faster and more efficiently, a critical consideration for emerging artificial intelligence systems. Such systems could use considerably less energy than existing machines, an Ole Miss engineering professor says. Photo illustration by Stefanie Goodwiller/University Marketing and Communications

By Jordan Karnbach

Published July 23, 2025